Balancing Innovation and Risk in AI: The Strategic Alignment Matrix

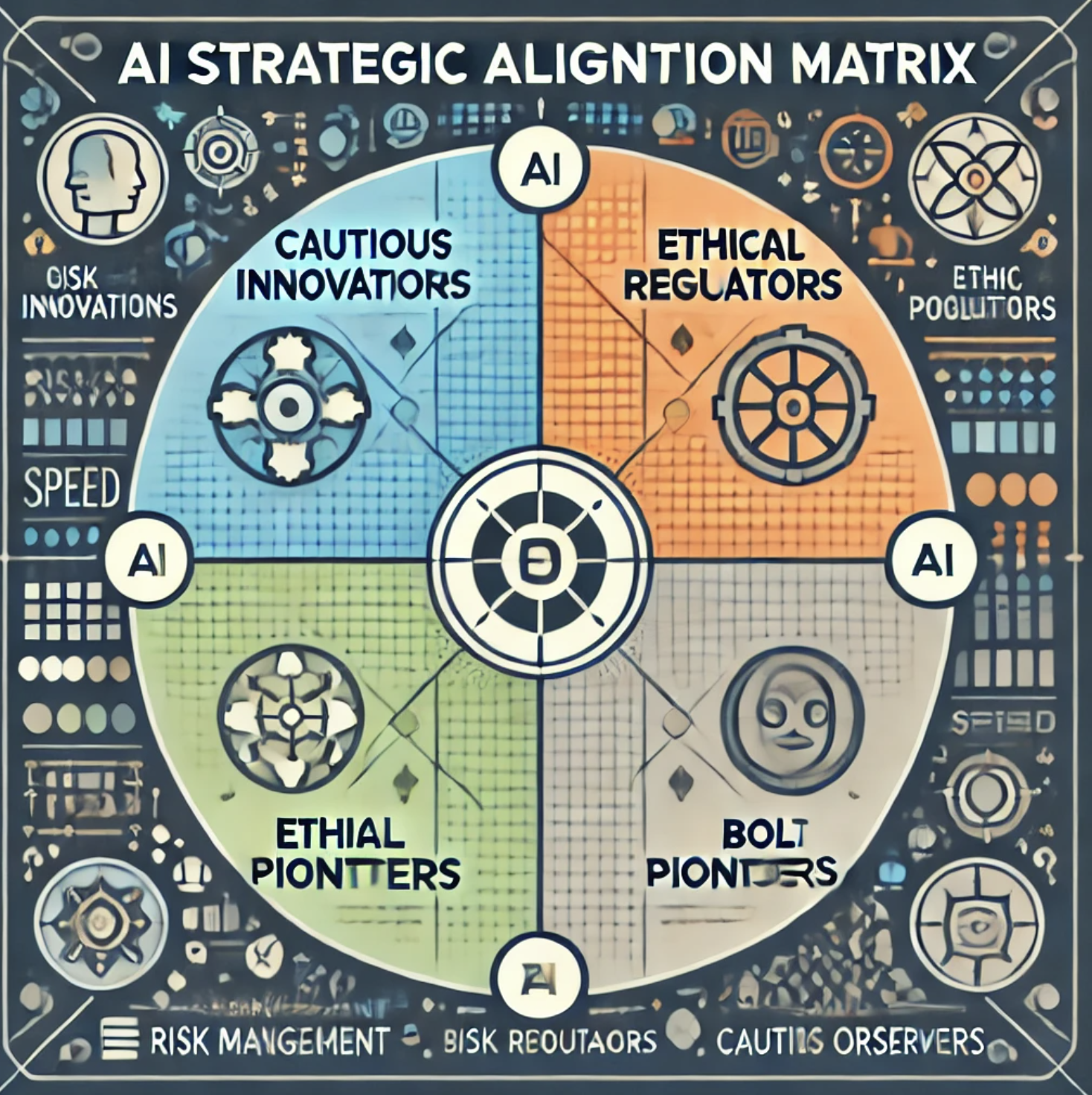

TL;DR: This article introduces the “AI Strategic Alignment Matrix,” a two-by-two framework that helps companies navigate the global landscape of AI regulation. The matrix divides strategies into four quadrants: Cautious Innovators (high concern for risks, aggressive AI development), Ethical Regulators (high concern for risks, cautious development), Bold Pioneers (low concern for risks, aggressive development), and Cautious Observers (low concern for risks, cautious development). Understanding your company’s position in this matrix can help align your AI strategy with the regulatory environment, ensuring both innovation and compliance.

Fast? Slow? Crazy AI Regulations? What should we do?

You may have noticed the growing interest in generative AI across the globe, with countries responding in vastly different ways. Some are racing ahead, eager to capitalize on the technology, while others are imposing strict regulations to manage the risks. These varying approaches have significant implications for the future of AI. In this article, I’ll explore how to balance the need to manage these risks with the desire to win the race to achieve artificial general intelligence (AGI). To help navigate these complexities, I’ll introduce the “AI Strategic Alignment Matrix,” a two-by-two matrix that will guide our thinking as we examine these different dynamics.

A Rational Approach to Crazy AI

Let’s explore the two key dimensions that are being weighed in the global response to generative AI. The first dimension is the concern for the risks and harms that AI might pose. As highlighted in the documentary [The Social Dilemma](https://en.wikipedia.org/wiki/The_Social_Dilemma), the Internet brought about many unintended consequences that we are still grappling with today: misinformation, the rise of the attention economy, and the resulting impact on our politics and society. The formation of the Center for Humane Technology underscores the ongoing battle to address these issues and educate the public about the potential dangers of new technologies. With AI, many people are keenly aware of these past lessons and are focused on preventing similar, or potentially more massive, unintended harms as this powerful new technology emerges.

The second dimension is the pursuit of artificial general intelligence (AGI). This is where the question of speed and aggressiveness comes into play. How quickly should we advance toward AGI? For many, it’s seen as an arms race—a race that must be won to secure technological, economic, and geopolitical power. The fear is that if one country or company doesn’t push forward aggressively, they will be left behind, potentially becoming economically disadvantaged or even irrelevant on the global stage. This belief fuels a drive to innovate and move rapidly, with the idea that the ends justify the means.

These are the two dimensions we’ll be discussing throughout the rest of this article as we examine how different countries are approaching these challenges. It’s a valuable thought exercise, not just on a global scale, but also for you as you consider what’s right for your company. What’s the best approach for your industry, your position in the global economy, and the broader ecosystem? I hope this framework offers you some insights, as it certainly helped me organize my thoughts while writing this article.

Cautious Innovators

Let’s start by examining the first quadrant: Cautious Innovators. Entities in this category are driving forward with AI innovation while maintaining a strong focus on managing risks and ensuring safety. They are characterized by a high concern for potential risks, yet they remain aggressive in their AI development efforts. California serves as a prime example of this approach. The state has introduced stringent regulations in an attempt to balance the tension between fostering innovation and managing the associated risks. (See “California Privacy and AI Legislation Update: August 26, 2024.”)

This approach has sparked debate, with some arguing that the regulations are not strong enough to address potential harms, while others claim they are stifling innovation. The fact that there is significant discourse on both sides indicates that California is actively trying to navigate this delicate balance in crafting its laws. The outcome of this balancing act is still up for debate, but it’s clear that California is attempting to lead in a way that mitigates risks without entirely halting progress.

For businesses, the impact of operating within this quadrant depends largely on their own strategic inclinations. If your company naturally aligns with the Cautious Innovators quadrant, you might find California’s approach fitting and manageable. However, if your company aligns more with one of the other quadrants, the regulations may feel restrictive and challenging.

I strongly encourage you to reflect on which quadrant best represents your company’s approach. Understanding your own perspective, as well as the perspectives of entities in other quadrants, will help you develop a strategic approach that aligns with your goals and the regulatory environment you operate in.

Ethical Regulators

Next, let’s explore the Ethical Regulators quadrant. Entities in this category exhibit a high concern for risks but take a more cautious and measured approach to AI development. Their primary focus is on ethical considerations and safety, often prioritizing these factors over rapid innovation. The European Union is a prime example of this approach.

The EU has drafted and enacted a series of laws that place a strong emphasis on risk assessment. In the EU’s view, if an AI system potentially infringes on the rights of its citizens, it is considered high risk and must be deployed in a manner that both acknowledges and mitigates those risks. This approach reflects a clear bias toward risk mitigation, ensuring that AI is developed and used in a way that protects citizens’ rights.

While the EU is not opposed to AI development, its support is primarily for AI applications in low-risk scenarios. The focus is on controlled, thoughtful growth rather than unfettered innovation. The EU’s approach underscores a commitment to ensuring that AI serves the public good and does not come at the expense of individual rights or societal welfare. (See “The EU Artificial Intelligence Act.”)

Bold Pioneers

Let’s now turn to the Bold Pioneers quadrant. Entities in this category adopt a bold, aggressive approach to AI development, prioritizing speed and innovation with minimal concern for potential risks. Japan’s strategy in this area has been surprising to many in the international community. The country has opted for minimal government intervention, allowing businesses to set their own standards and drive AI development forward at a rapid pace.

This approach appears to be a response to Japan’s prolonged economic stagnation, with AI being seen as a potential catalyst for economic revitalization. By embracing a high-speed, low-regulation environment, Japan is betting that bold innovation will be the key to securing a competitive edge and rejuvenating its economy. Whether or not this strategy will pay off remains to be seen, but it is clear that Japan has committed to a path where the pursuit of technological advancement is prioritized, and the potential rewards are seen as worth the risks involved. (See “Japan’s Strategy for Building a Robust Domestic AI Ecosystem.”)

Cautious Observers

Finally, let’s explore the Cautious Observers quadrant. Entities in this category exhibit both low concern for risks and low aggressiveness in AI development, adopting more of a wait-and-see attitude. The U.S. federal government seems to fall squarely into this quadrant. Its approach to AI regulation has been relatively hands-off, with limited and slower-paced regulation compared to other parts of the world. The U.S. has not been at the forefront of drafting AI regulations and appears likely to be among the laggards in this area.

This could be attributed, in part, to the political landscape, particularly during an election year. However, it’s important to recognize that the U.S. government’s bureaucratic processes and policy-making often take years, if not decades, to develop fully. The decision to take a slower, more deliberate approach in regulating AI may have far-reaching implications both domestically and on the global stage.

One significant concern for U.S.-based companies is that this wait-and-see attitude could lead to inconsistent regulations across states, creating complications and uncertainties for businesses operating nationally. Additionally, this cautious stance may put the U.S. at a competitive disadvantage globally, as other countries push forward more aggressively with AI development and regulation. The risks of being a laggard in AI regulation are not just domestic but could also impact the U.S.’s standing in the international arena.

Strategic Implications for Companies

The two-by-two matrix presents several strategic implications for companies. First and foremost, it’s crucial to understand your own natural inclination—identify which quadrant aligns with how you prefer to operate. Then, compare that to the environment in which you actually find yourself. The greater the gap between your natural inclination and the current operating environment, the more challenging it will be to adapt. If your preferred quadrant is adjacent to your current environment, the shift may be manageable. However, if your preferred and actual environments are diametrically opposed, adapting could require a significant effort.
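To make the gap between your preferred quadrant and your actual environment concrete, here is a minimal Python sketch. It assumes each quadrant can be reduced to two yes/no answers (high concern for risks, aggressive development), as in the TL;DR; the names `Quadrant`, `quadrant_gap`, and `describe_gap` are hypothetical and exist only for illustration.

```python
from enum import Enum


class Quadrant(Enum):
    # Each value is (high_risk_concern, aggressive_development),
    # following the quadrant definitions in the TL;DR.
    CAUTIOUS_INNOVATORS = (True, True)
    ETHICAL_REGULATORS = (True, False)
    BOLD_PIONEERS = (False, True)
    CAUTIOUS_OBSERVERS = (False, False)


def quadrant_gap(preferred: Quadrant, actual: Quadrant) -> int:
    """Count the dimensions on which two quadrants differ (0, 1, or 2)."""
    return sum(p != a for p, a in zip(preferred.value, actual.value))


def describe_gap(preferred: Quadrant, actual: Quadrant) -> str:
    """Label the gap: same quadrant, adjacent, or diametrically opposed."""
    labels = {0: "aligned", 1: "adjacent", 2: "opposed"}
    return labels[quadrant_gap(preferred, actual)]


if __name__ == "__main__":
    # A company that prefers to operate as a Bold Pioneer but finds itself
    # in an Ethical Regulators environment differs on both dimensions.
    print(describe_gap(Quadrant.BOLD_PIONEERS, Quadrant.ETHICAL_REGULATORS))
```

A real assessment is rarely this binary. Treat the two dimensions as a starting point for discussion rather than a score.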

For companies operating in high-regulation environments, it’s essential to make robust AI compliance and risk management a core competitive advantage. You need to develop a deep understanding of the regulatory landscape and ensure that your business is equipped to navigate it effectively. Investing in risk management, transparency, and compliance will not only help you meet regulatory requirements but also enable you to outmaneuver competitors who may struggle with these demands.

On the other hand, for companies in low-regulation, high-speed environments, the focus should be on prioritizing innovation and agility. However, it’s important to anticipate future regulations and potential challenges that may arise as the industry evolves and new risks are identified. It’s also worth asking whether your current pace aligns with your company’s ethical standards. Even in a fast-paced environment, you might need to intentionally slow down at times to avoid rushing headlong into unforeseen risks that could jeopardize the entire company.

In essence, whether you’re in a high-regulation or high-speed environment, alignment between your strategy and the regulatory landscape is key. Adapting to the quadrant you’re in, or finding ways to align it more closely with your natural tendencies, will be critical for your company’s success.

Inaction is Not a Rational Choice

AI in all its forms, whether generative AI, machine learning, or other buzzwords, is rapidly transforming the landscape of what’s possible. Understanding the regulatory environment you operate in is crucial to aligning your AI strategy, whether you’re an early adopter or a fast follower. The world is changing at a pace that may be uncomfortable, and AI is pushing the boundaries of what we once thought possible. Companies are scrambling to define their place within this expanded frontier.

Some are charging ahead, eager to capitalize on new opportunities, while others are hesitant, wary of the unknown risks. The two-by-two matrix I’ve introduced is designed to provide a rational, strategic framework to help you understand where you stand and how to make informed choices. Remember, inaction is not an option; it’s simply a bad choice that lets others pass you by. Being proactive in understanding your identity, your goals, and how to align your business with the changing landscape is essential. Take control of your strategy and position yourself to thrive in this rapidly evolving world.