Navigating the AI Landscape: Choosing the Right Model for Your Needs (A Beginner’s Guide)

TL;DR: Choosing the Right AI Model

Feeling overwhelmed by AI models? You’re not alone! If you’re new to generative AI, the key is just to get started—don’t worry too much about picking the “perfect” model upfront.

Key Takeaways:

✅ AI models process and generate human-like text—they help with writing, coding, customer service, and more.

✅ Major players include OpenAI (ChatGPT), Anthropic (Claude), Microsoft (Copilot), Google (Gemini), Meta (LLaMA), and Groq (a platform for running open models at high speed).

✅ Choosing the right model depends on:

• Your use case (content creation, analysis, coding, etc.)

• Performance & accuracy (try multiple models and compare results)

• Cost & pricing (pay-as-you-go vs. subscription)

• Integration capabilities (APIs, workflow fit)

• Ethical considerations (bias, privacy, fairness)

✅ Best approach? Experiment with a few top models, compare responses, and build confidence through hands-on use.

AI is evolving fast—jump in, explore, and adapt as you go!

Introduction: The AI Model Dilemma

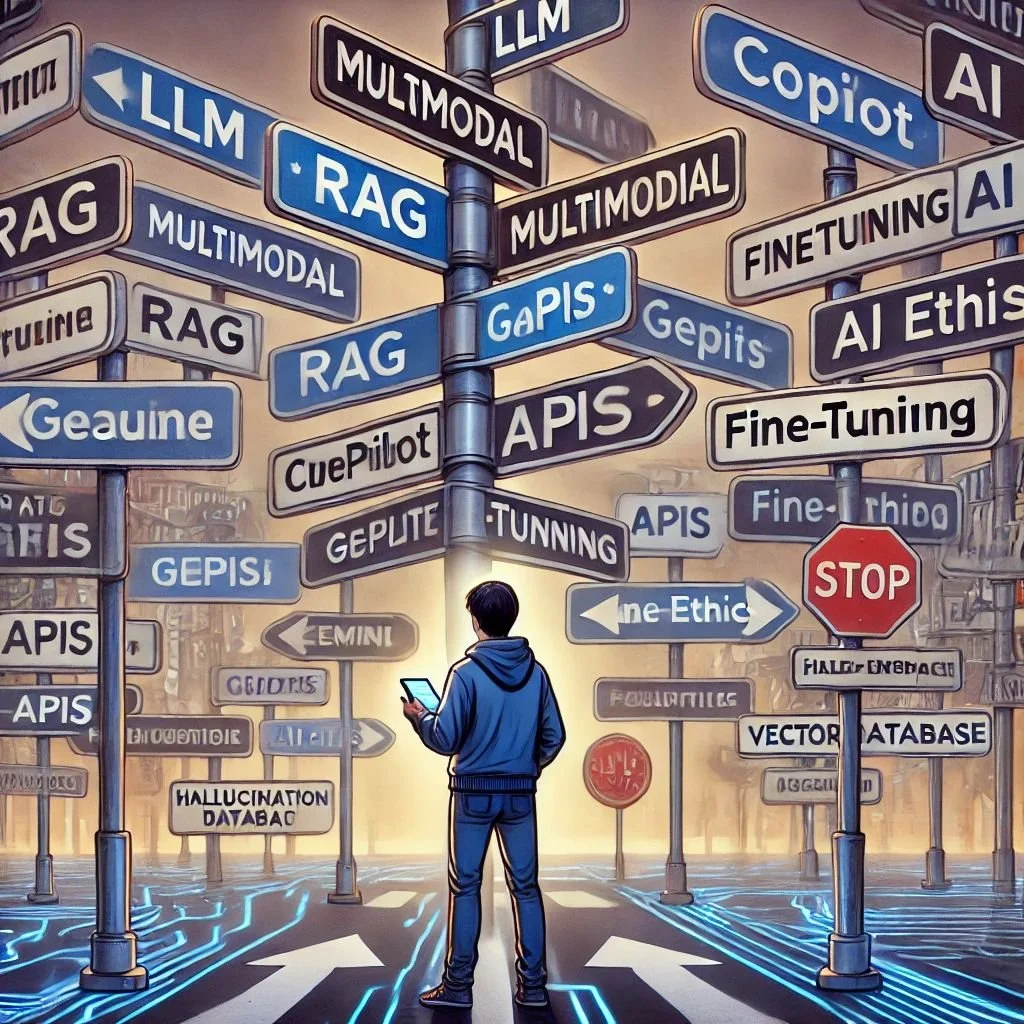

Are you just starting your journey with generative AI and large language models like ChatGPT? If so, you might find yourself asking: Which model should I use? Which one is best? Where do I go to access these models? Keeping up with AI can feel overwhelming. Frankly, very overwhelming.

At Savion, we regularly work with seasoned executives, people with 15, 20, or even 30 years of business experience, who now have to grapple with generative AI and the many technologies rapidly emerging around it. If they find it overwhelming, it’s completely normal for you to feel the same way.

This article will break it down for you, making the process more approachable. The key takeaway? Just get started. You don’t need to overanalyze everything upfront. Once you begin experimenting with AI models, you’ll start to develop an intuitive understanding—a mental model—that will help you navigate this space with confidence.

Understanding AI Models

What is a Language Model?

For now, we’re focusing on language models—AI systems that process and generate human-like text. Whether you’re typing a question or speaking into a chatbot, the AI model interprets your input and generates a relevant response.

A language model is a type of artificial intelligence trained on vast amounts of text data. These models recognize patterns, understand context, and generate meaningful responses. Modern Large Language Models (LLMs) can perform various tasks, including answering questions, creating content, analyzing text, and assisting with coding.

Key Players in the Market

Several major players dominate the AI landscape, each offering unique strengths:

OpenAI – Creator of the GPT models and ChatGPT, the chatbot built on them, known for its conversational capabilities and content generation.

Anthropic – Developer of Claude, an AI assistant focused on safety and ethics.

Microsoft – Offers Copilot, integrated deeply into Microsoft 365 products.

Google – Introduced Gemini, a multimodal AI capable of handling text, images, audio, video, and code.

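Meta (Facebook) – Developed LLaMA, a family of openly available models that anyone can download and customize (often described as open-source, though their license carries some restrictions).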

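Other notable names include IBM, Cohere, and Hugging Face, along with various startups pushing AI boundaries. You may also hear about DeepMind, which is Google’s AI research lab (now Google DeepMind) and the team behind Gemini.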

Why Choosing the Right Model Matters

If you’re just starting, you might hear people debating which model is best and feel lost in the conversation. Initially, these debates don’t matter much—your focus should be on getting hands-on experience with any of the major models. However, as you use AI more in your personal or business life, key factors such as cost, performance, and ethics will become more relevant.

For instance, many people stress over pricing differences between AI models. Yet if an AI tool saves you two to three hours of work and costs just a few cents, does it really matter if one provider charges slightly more than another? The true value lies in the time and efficiency gains.
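To put rough numbers on this, here is a minimal Python sketch of per-token cost arithmetic. The token counts, the per-million-token prices, and the hourly rate are hypothetical placeholders, not any provider’s actual rates; swap in current figures from your provider’s pricing page.

```python
def task_cost(input_tokens: int, output_tokens: int,
              price_in_per_million: float, price_out_per_million: float) -> float:
    """Dollar cost of one request under per-token (pay-as-you-go) pricing."""
    return (input_tokens * price_in_per_million
            + output_tokens * price_out_per_million) / 1_000_000

# Hypothetical task: summarizing a long report.
# About 20,000 tokens in (the report) and 1,500 tokens out (the summary).
# Prices are illustrative placeholders, in dollars per million tokens.
cheap = task_cost(20_000, 1_500, price_in_per_million=0.50, price_out_per_million=1.50)
pricier = task_cost(20_000, 1_500, price_in_per_million=3.00, price_out_per_million=15.00)

hours_saved = 2.5    # assumption: midpoint of "two to three hours"
hourly_rate = 60.0   # assumption: what an hour of your time is worth, in dollars
value_of_time = hours_saved * hourly_rate

print(f"Cheaper model: ${cheap:.4f} per task")
print(f"Pricier model: ${pricier:.4f} per task")
print(f"Value of time saved: ${value_of_time:.2f}")
```

Even the pricier placeholder works out to well under a dime per task, a tiny fraction of the value of the time saved, which is why small price differences rarely decide the matter for individual tasks. At large volumes (thousands of requests a day), the same arithmetic is worth redoing.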

Factors to Consider When Choosing an AI Model

Even if you don’t have all the answers yet, simply knowing what questions to ask can accelerate your AI journey.

1. Use Case and Specific Requirements

What do you want the AI to do? General conversation, content creation, data analysis?

Do you need multilingual capabilities?

How complex are your tasks?

If you’re unsure, a great starting point is to simply ask the AI: “Hey, I have this task in mind. What do you recommend?” You’ll be surprised by how helpful AI can be in guiding your journey.

2. Performance and Accuracy

Different models excel in different areas. To evaluate them:

Try the same question across different AI models and compare responses.

Use evaluation platforms like LLM Stats, LM Arena, or Artificial Analysis for leaderboards and benchmark comparisons.

Consider not just accuracy but also contextual understanding—some models perform better at nuanced conversations.
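If you want to make side-by-side testing repeatable, a small script can send the same prompt to several models and lay the answers out together. The Python sketch below is hypothetical: `ask_model_a` and `ask_model_b` are placeholder functions standing in for whatever chat app or provider SDK you actually use, so only the comparison structure is meant to carry over.

```python
from typing import Callable, Dict

# A "model" here is any function that takes a prompt and returns a reply.
AskFn = Callable[[str], str]

# Hypothetical stand-ins: replace with real calls to the services you use.
def ask_model_a(prompt: str) -> str:
    return f"[Model A's answer to: {prompt}]"

def ask_model_b(prompt: str) -> str:
    return f"[Model B's answer to: {prompt}]"

def compare(prompt: str, models: Dict[str, AskFn]) -> Dict[str, str]:
    """Send the same prompt to every model and collect replies by model name."""
    return {name: ask(prompt) for name, ask in models.items()}

def print_side_by_side(prompt: str, replies: Dict[str, str]) -> None:
    """Print each reply under its model name so they are easy to compare."""
    print(f"PROMPT: {prompt}\n")
    for name, reply in replies.items():
        print(f"--- {name} ---")
        print(reply, "\n")

if __name__ == "__main__":
    question = "Summarize the pros and cons of remote work in three bullet points."
    replies = compare(question, {"Model A": ask_model_a, "Model B": ask_model_b})
    print_side_by_side(question, replies)
```

Keeping a short list of your own real-world prompts and rerunning them whenever a new model appears gives you a personal benchmark that complements the public leaderboards.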

3. Cost and Pricing Models

AI pricing structures vary, and it's essential to balance cost against value:

Per-token pricing (pay-as-you-go) vs. subscription models.

Some AI capabilities are embedded in tools you may already use, such as Notion AI, and these often require an additional subscription or a higher-tier plan.

Consider long-term scalability and hidden costs (e.g., API call fees).
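To weigh pay-as-you-go against a subscription, a simple break-even calculation helps: divide the monthly fee by your typical cost per request. The Python sketch below uses hypothetical figures; real prices vary by provider and change often. Keep in mind that a chat-app subscription and pay-as-you-go API access are usually billed as separate products, so this comparison only applies when either option would cover your use.

```python
def break_even_requests(monthly_fee: float, cost_per_request: float) -> float:
    """Requests per month at which pay-as-you-go costs the same as the subscription."""
    if cost_per_request <= 0:
        raise ValueError("cost_per_request must be positive")
    return monthly_fee / cost_per_request

def cheaper_option(requests_per_month: int, monthly_fee: float,
                   cost_per_request: float) -> str:
    """Return which pricing model is cheaper for a given monthly volume."""
    pay_as_you_go_total = requests_per_month * cost_per_request
    return "subscription" if monthly_fee < pay_as_you_go_total else "pay-as-you-go"

# Hypothetical figures: a $20/month plan vs. roughly $0.02 per request.
fee, per_request = 20.0, 0.02
print(f"Break-even: about {break_even_requests(fee, per_request):,.0f} requests/month")
for volume in (300, 2_000):
    print(f"{volume} requests/month -> {cheaper_option(volume, fee, per_request)}")
```

Below the break-even volume, paying per request is cheaper; above it, the flat fee wins. Subscription plans may also come with usage limits, which is worth checking before committing.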

4. Integration Capabilities

Think about how AI will fit into your workflow:

Does it offer APIs for easy integration?

Does it work with tools you already use (e.g., Microsoft, Google, or CRM systems)?

Will the provider continue to innovate and support the model long-term?

Many businesses underestimate hidden costs such as cultural change, employee training, ongoing support, and compliance requirements. These factors often call for professional guidance.

5. Ethical Considerations and Bias

You’ll frequently hear discussions about AI ethics, privacy, and bias. If these terms sound unfamiliar, don’t worry: just ask the AI itself, for example, “Explain AI bias, privacy risks, and hallucinations in simple terms.”

AI models are built using data shaped by the biases of our world. If you grew up in Africa, your worldview likely differs from that of someone raised in the American Midwest, and AI models absorb similar differences from their training data. Some people overemphasize this issue while others ignore it entirely; the key is to take a balanced approach that aligns with your personal or company values.

Comparing Popular AI Models

While AI models constantly evolve, the top five contenders today are likely to remain industry leaders. Here’s a snapshot of their strengths and best use cases:

A. OpenAI’s GPT Models (ChatGPT)

Strengths: Versatile, widely used, great for general tasks.

Best For: Content creation, coding, customer service, research.

Considerations: Like all LLMs, it can produce factual inaccuracies (hallucinations), so verify important facts.

B. Anthropic’s Claude

Strengths: Strong ethical framework, large context window.

Best For: Document analysis, research, legal and regulatory tasks.

Considerations: More cautious in its responses.

C. Microsoft’s Copilot

Strengths: Seamless integration with Microsoft products.

Best For: Office productivity, Excel analysis, Teams collaboration.

Considerations: Best suited for Microsoft users.

D. Google’s Gemini

Strengths: Multimodal AI (text, images, audio, video, and code).

Best For: Complex problem-solving, creative applications.

Considerations: Still evolving; works best within the Google ecosystem (Gmail, Docs, Drive).

E. Other Notable Models

Meta’s LLaMA – Open-source model for customization.

Cohere (Command models) – Enterprise-focused AI solutions.

Hugging Face – A hub hosting a huge library of open models from many developers, including specialized ones.

Key Takeaway: Instead of agonizing over which model is best, pick one of the top five and start experimenting. AI is evolving fast, and the best model today might not be the best model tomorrow.

Conclusion: Get Started and Keep Learning

The best way to navigate the AI landscape is to jump in and start using AI models. Once you experience their capabilities firsthand, you’ll develop a better understanding of how they fit into your life and business.

Don’t stress about the “perfect” model—just pick one and start experimenting.

Test different models for different tasks to see what works best.

Stay informed, as AI models evolve rapidly.

By taking a hands-on approach, you’ll build confidence and make more informed decisions as you scale your AI use. Welcome to the AI journey—let’s get started!

Bonus: Glossary of Key Terms

LLM (Large Language Model) - A type of AI trained on vast amounts of text data to understand and generate human-like responses. Examples include GPT-4, Claude, and Gemini.

RAG (Retrieval-Augmented Generation) - An AI technique that combines language models with external data retrieval, improving accuracy by pulling in real-time or stored knowledge before generating a response.

Multimodal AI - AI models that can process and generate multiple types of data, such as text, images, video, and audio (e.g., Google Gemini).

Hallucination (AI Hallucination) - When an AI model generates false or misleading information that appears plausible but is incorrect or unsupported by facts.

Fine-tuning - The process of training a pre-existing AI model on a specific dataset to specialize in a particular task or domain.

Prompt Engineering - The practice of crafting effective inputs (prompts) to guide AI models toward generating better responses.

Tokens - Units of text that AI models process. One token can be a short word, part of a longer word, or punctuation. AI providers often charge per token.

Embedding - A numerical representation (a list of numbers, or vector) of a word, phrase, or entire document, arranged so that texts with similar meanings end up with similar numbers. Embeddings power AI search, clustering, and retrieval.

Zero-shot Learning - An AI’s ability to perform tasks without specific training on that task, relying only on general knowledge from its dataset.

Few-shot Learning - When an AI model improves its performance after being shown a few examples in the prompt before generating a response.

Transformer Model - A deep learning architecture that powers modern LLMs, using self-attention mechanisms to process large amounts of text efficiently.

Neural Network - A computational system inspired by the human brain, used in AI models to process data, recognize patterns, and generate outputs.

Bias in AI - The tendency of AI models to reflect societal biases found in their training data, which can lead to unfair or misleading outputs.

API (Application Programming Interface) - A set of tools that allows developers to integrate AI models into applications, websites, or business workflows.

Autoregressive Model - A model that generates text by predicting one word (or token) at a time based on the previous context.

Temperature (AI Setting) - A parameter that controls the randomness of an AI model’s responses. Lower values make the output more predictable, while higher values introduce more variation.

Context Window - The maximum amount of text, measured in tokens, that an AI model can consider at once when generating a response. Larger context windows allow models to work with longer documents and retain more of the prior conversation.

Ethical AI - AI development practices that emphasize fairness, transparency, and reducing harm, ensuring responsible deployment of AI technology.

Synthetic Data - Artificially generated data used to train AI models when real-world data is unavailable, limited, or sensitive.

Self-supervised Learning - A machine learning method where the training signal comes from the data itself (for example, predicting the next word in a sentence), so no human-labeled examples are needed. This improves scalability and efficiency.

Federated Learning - A privacy-focused AI training technique where data remains on user devices and only model updates, not the raw data, are shared, reducing data exposure.

Vector Database - A specialized database that stores numerical representations (embeddings) of text, images, or other data types, enabling efficient AI-powered search and retrieval.
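To see how the Embedding, Vector Database, and RAG entries fit together, here is a minimal, self-contained Python sketch. It rests on simplifying assumptions: word counts stand in for a real embedding model (which captures meaning, not just shared words), `TinyVectorStore` is a hypothetical in-memory class rather than any real vector database’s API, and the final step of sending the prompt to a language model is left out.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Stand-in 'embedding': lowercase word counts (real systems use an embedding model)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means nothing in common."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class TinyVectorStore:
    """Hypothetical in-memory stand-in for a vector database."""
    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

def build_rag_prompt(question: str, store: TinyVectorStore, k: int = 2) -> str:
    """RAG, retrieval step: put the most relevant passages into the prompt."""
    context = "\n".join(f"- {p}" for p in store.search(question, k))
    return (f"Answer using only the context below.\n\nContext:\n{context}\n\n"
            f"Question: {question}")

store = TinyVectorStore()
store.add("Our refund policy allows returns within 30 days of purchase.")
store.add("Support is available by email on weekdays from 9am to 5pm.")
store.add("Premium plans include priority support and extra storage.")
print(build_rag_prompt("How many days do I have to return a purchase?", store, k=1))
```

The model then answers from the retrieved passage instead of relying only on what it absorbed during training, which is how RAG helps reduce hallucinations about your own data.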
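The Autoregressive Model and Temperature entries can be illustrated with a toy text generator in Python. The next-token scores in `TOY_MODEL` are made up, and the toy looks only at the previous token, whereas a real LLM scores every token in its vocabulary using the full context. Dividing scores by the temperature before a softmax is the standard way temperature works, though providers may apply additional sampling settings on top.

```python
import math
import random

def softmax_with_temperature(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw scores into probabilities; temperature rescales the scores first."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical next-token scores for a toy "model" that knows only a few continuations.
TOY_MODEL = {
    "<start>": {"The": 2.0, "A": 1.0},
    "The": {"cat": 2.0, "dog": 1.5, "report": 0.5},
    "A": {"cat": 1.0, "dog": 1.0},
    "cat": {"sleeps": 2.0, "<end>": 1.0},
    "dog": {"barks": 2.0, "<end>": 1.0},
    "report": {"<end>": 1.0},
    "sleeps": {"<end>": 1.0},
    "barks": {"<end>": 1.0},
}

def generate(temperature: float, seed: int = 0, max_tokens: int = 10) -> str:
    """Autoregressive generation: pick one token at a time, each choice
    conditioned on what came before (here, only the previous token)."""
    rng = random.Random(seed)
    token, output = "<start>", []
    for _ in range(max_tokens):
        probs = softmax_with_temperature(TOY_MODEL[token], temperature)
        token = rng.choices(list(probs), weights=list(probs.values()))[0]
        if token == "<end>":
            break
        output.append(token)
    return " ".join(output)

for t in (0.2, 1.0, 2.0):
    print(t, softmax_with_temperature(TOY_MODEL["The"], t))
print(generate(temperature=0.2), "|", generate(temperature=2.0, seed=1))
```

At a temperature of 0.2, most of the probability lands on the top-scoring token, so output is predictable; at 2.0 the probabilities flatten and output varies more from run to run.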